A survey of more than 2,000 smartphone users by second-hand smartphone marketplace SellCell found that 73% of iPhone users and a whopping 87% of Samsung Galaxy users felt that AI adds little to no value to their smartphone experience.

SellCell only surveyed users with an AI-enabled phone – that’s an iPhone 15 Pro or newer or a Galaxy S22 or newer. The survey doesn’t give an exact sample size, but more than 1,000 iPhone users and more than 1,000 Galaxy users were involved.

Further findings show that most users of either platform would not pay for an AI subscription: 86.5% of iPhone users and 94.5% of Galaxy users would refuse to pay for continued access to AI features.

From the data listed so far, it seems that people just aren’t using AI. For both iPhone and Galaxy users, only about two-fifths of those surveyed have tried AI features – 41.6% for iPhone and 46.9% for Galaxy.

So, that’s a majority of users not even bothering with AI in the first place and a general disinterest in AI features from the user base overall, despite both Apple and Samsung making such a big deal out of AI.

People here like to shit on AI, but it has its use cases. It’s nice that I can search for “horse” in Google Photos and get back all pictures of horses, and it is also really great for creating small scripts. I, however, do not need an LLM chatbot on my phone and I really don’t want it everywhere in every fucking app with a subscription model.

People wouldn’t shit on AI if it wasn’t needlessly crammed down our throats.

people wouldn’t shit on AI if it were actually replacing our jobs without taking our pay and creating a system of resource management free from human greed and error.

The only thing is Google Photos did that before AI was installed. Now I have to press two extra buttons to get to the old search method instead of using the new AI, because the AI gives me the most bizarre results when I use it.

Exactly. My results with Gemini search are worse every single time

You type “horse” into google pictures and you get a bunch of AI generated pictures of what the model thinks horses look like.

I love me some self-hosted ML models, such as Fooocus.

Although I think Steve Jobs was a real piece of shit, his product instincts were often on point, and his message in this video really stuck with me. I think companies shoehorning AI in everything would do well to start with something useful they want to enable and work backwards to the technology as he described here:

https://m.youtube.com/watch?v=48j493tfO-o

AI is a bad idea completely, and if people cared at all about their privacy they would disable it.

It’s all well and good to say that AI categorises pictures and allows you to search for people and places in them, but how do you think that is accomplished? The AI scans and remembers every picture on your phone. Same with email summaries. It’s reading your emails too.

The ONLY assurance that this data isn’t being sent back to HQ is the company’s word that it isn’t. And being closed source, we have no possible way of auditing their use of data to see if that’s true.

Do you trust Apple and/or Google? Because you shouldn’t.

Especially now, when Apple Intelligence is enabled by DEFAULT when setting up a new AI-capable iPhone or iPad.

It should be OPT-IN, not opt-out.

All AI can ever really do is invade your privacy and make humans even more stupid than they are already. Now they don’t even have to go to a search engine to look for things. They ask the AI and blindly believe whatever bullshit it regurgitates at them.

AI is dangerous on many levels because soon it will be deciding who gets hired for a new job, who gets seen first in the ER and who gets that heart transplant, and who dies.

With enough RAM and ideally a good GPU you can run smaller models (~8B Parameters) locally on your own device.
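For a rough idea of what “enough RAM” means here, a back-of-the-envelope sketch: the memory for the weights alone is roughly parameter count times bytes per weight. The function name and the list of precisions below are just illustrative assumptions, and the estimate ignores the context cache and runtime overhead, so real usage will be somewhat higher.

```python
def model_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes).

    Assumption: every weight is stored at the same precision; context
    cache, activations and runtime overhead are not counted.
    """
    return n_params * bits_per_weight / 8 / 1e9


N_PARAMS = 8e9  # the "~8B parameters" model size mentioned above

# Common precisions: 16-bit floats, 8-bit and 4-bit quantization.
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label}: ~{model_memory_gb(N_PARAMS, bits):.0f} GB")
```

So an 8B model needs very roughly 16 GB at 16-bit precision, and about 4 GB once quantized to 4 bits, which is why the quantized versions are the ones people tend to run on consumer hardware.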

“PLEASE use our hilariously power inefficient wrongness machine.”

please burst that bubble already so i can get a cheap second hand server grade gpu

Do I use Gen AI extensively?…

No. But do I find it useful?…

Also no.

Doesn’t help that I don’t know what this “AI” is supposed to be doing on my phone.

Touch up a few photos on my phone? OK, go ahead, I’ll turn it off when I want a pure photography experience (or use a DSLR).

Text prediction? Yeah why not… I mean, is it the little things like that?

So it feels like either these companies don’t know how to use “AI” or they don’t know how to market it… or, more likely, they know one way to market it and the marketing department is driving the development. I’m sure there are good uses, but it seems like they don’t want to put in the work and just give us useless ones.

Useless for us, but not for them. They want us to use them like personalised confidante-bots so they can harvest our most intimate data.

I recently got Apple Intelligence on my phone, and I had to google around to see what it really does. I couldn’t quite figure it out, to be honest. I think it is related to Siri somehow (which I have turned off, because why would that be on?) and apparently it could tie into an Apple Watch (which I don’t have), so I eventually concluded that it doesn’t do anything as of right now. Might be wrong though.

AI is not there to be useful for you. It is there to be useful for them. It is a perfect tool for capturing every last little thought you could have and directing you perfectly to what they can sell you.

It’s basically one big way to sell you shit. I promise we will follow the same path as most tech. It’ll be useful for some stuff, and in this case it’s being heavily forced upon us whether we like it or not. Then its usefulness will be slowly diminished as it’s used more heavily to capitalize on your data, thoughts, writings, code, and learn how to suck every last dollar from you whether you’re at work or at home.

It’s why DeepSeek spent so little and works better. They literally were just focusing on the tech.

All these billions are not just being spent on hardware or better optimized software. They are being spent on finding the best ways to profit from these AI systems. It’s why they’re being pushed into everything.

You won’t have a choice on whether you want to use it or not. It’ll soon be the only way to interact with most systems, even if it doesn’t make sense.

Mark my words. When Google stops standard search on their home page and it’s a fucking AI chat bot by default. We are not far off from that.

It’s not meant to be useful for you.

Yes, it seems like no one even read the damn user agreement. AI just adds another level to our surveillance state. It’s only there to collect information about you and to figure out the inner workings of its users’ minds to sell ads. Gemini even listens to your conversations if you have the quick access toggle enabled.

DeepSeek cost so little because they were able to use the billions that OpenAI and others spent and feed that into their training. DeepSeek would not exist (or would be a lot more primitive) if it weren’t for OpenAI.

That’s not how these models work. It’s not like OpenAI was sharing all their source code. If anything OpenAI benefits from DeepSeek because they released their entire code.

OpenAI is an ironic name now, ever since Microsoft became a majority shareholder. They are anything but “open”.

I side-graded from an iPhone 12 to an Xperia as a toy to tinker around with recently, and I disabled Gemini on my phone not long after it let me join the beta.

Everything seemed half-baked. Not only were the answers meh, but it also felt like an invasion of privacy after reading the user agreement. Gemini can’t even play a song on your phone, or get you directions home. What an absolute joke.

Ironically, on my Xperia 1 VI (which I specifically chose as my daily driver because of all the compromises on flagship phones from other brands) I had the only experience where I actually felt like a smartphone feature based on machine learning helped my experience, even though the Sony phones had practically no marketing with the AI buzzwords at all.

Sony actually trained a machine learning model for automatically identifying face and eye location for human and animal subjects in the built-in camera app, in order to be able to keep the face of your subject in focus at all times regardless of how they move around. Allegedly it’s a very clever solution trained to identify skeletal position and, from that, head and eye positions. It works particularly well when your subject moves around quickly, which is where this is especially helpful.

And it works so incredibly well, wayyyyy better than any face tracking I had on any other smartphone or professional camera, it made it so so much easier for me to take photos and videos of my super active kitten and pet mice lol

That’s pretty neat, I think that’s a great example of machine learning being useful for everyday activities. Face detection on cameras has been a big issue ever since the birth of digital photography. I’m using a Japanese 5 III that I picked up for $130 and it’s been great. I’ve heard of being able to side-load camera apps from other Xperias onto the 5 III, so I’ll give it a try.

I think Sony makes great hardware and their phones have some classy designs, and I’m also a fan of their DSLRs. I’ve always admired their phones going back to the Ericsson Walkmans; their designs have aged amazingly. I appreciate how close to stock Sony’s Xperia phones are. I don’t like UIs and bloatware you can’t remove. My last Android phone, a Galaxy S III, was terrible in that regard and put me off from buying another Android until recently. I was actually thinking about getting a 1 VI as my next phone and installing Lineage on it now that I’m ready to commit.

I totally agree about the AOSP-like ROM and I love it so much too, especially since Sony also makes it super straightforward to root (took me less than 10 minutes) with no artificial function limitations after root (unlike the Samsung models where you can’t even root at all). A highly AOSP-like ROM also means a lot of the cool OS customization tools originally developed for Pixel phones, where most of such community development efforts are focused, tend to mostly work on the Xperia phones too :p

For side-loading Sony native apps from other models, I tried the old pro video recording app from the previous gen (the Cinema Pro) on my Xperia 1 VI just out of curiosity (since the new unified camera app with all the pro camera and pro video features included in a single app is definitely a usability improvement lol), and it worked fine, so it might work too if you side-load the new camera app onto your older model. Feel free to DM me if you’re interested in experimenting with this and I can try the various methods for exporting that app and send it to you.

Lineage OS is not yet available for the gen VI model since it only came out in 2024, but the previous gen V model got its first Lineage OS release in around September 2024, so it might not take that long to get Lineage OS for the gen VI model :D

I bought this 5 III with the expectation of flashing Lineage onto it. The hold-up is that Japanese carriers like Docomo lock the bootloader. There was a way to unlock the bootloader by using a paid service from a company called Canadian Wireless that sends you a service code that unlocks these Japanese Xperia phones. What I didn’t know was that Sony killed the servers that send the unlock code back last June, so now I’m stuck on the stock ROM. No biggie though; like you’ve been saying, the stock ROM is close enough to an AOSP ROM that it’s not that big of a deal. Having security updates would be preferable though.

Hopefully by the time I upgrade to the 1 VI, Lineage will be available. Until then I’m happy with what I got.

I’m totally interested in trying out the newer camera app. The camera app that comes with the 5 III isn’t very good: face detection isn’t very good and auto mode isn’t great. I just haven’t gotten around to looking for the APKs from the newer phones, so that would save me a lot of time.

Thanks, I’ll send you a DM!

Tbf most people have no clue how to use it nor even understand what “AI” even is.

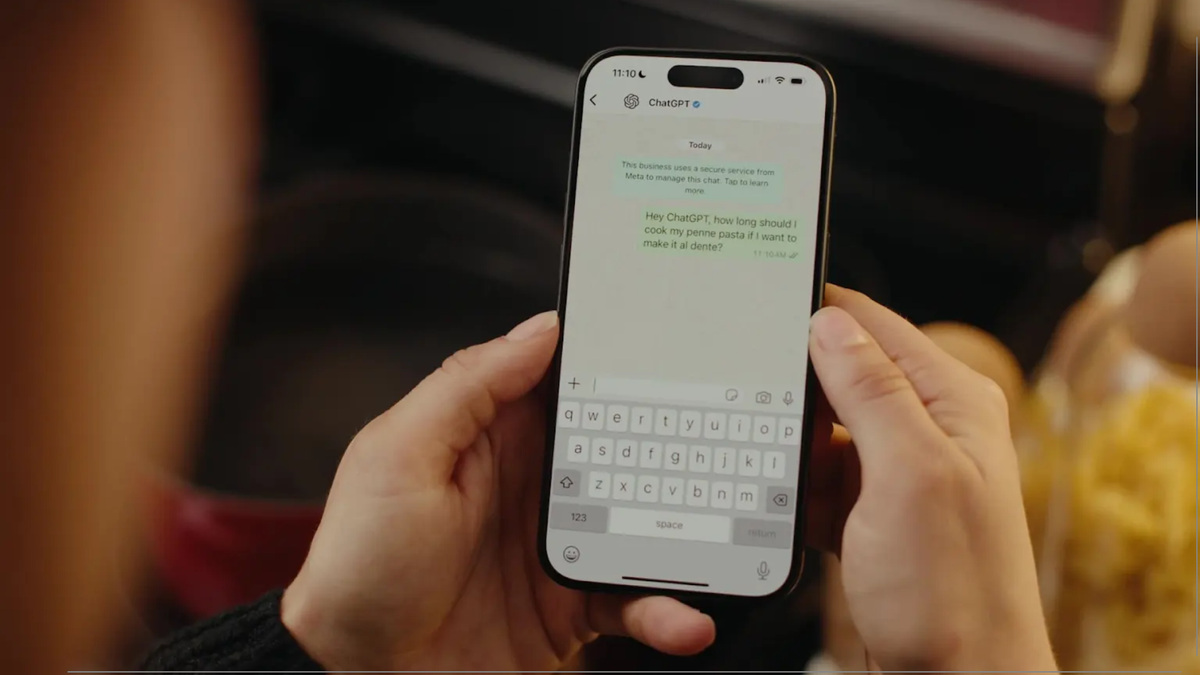

I just taught my mom how to use Circle to Search and it’s a real game changer for her. She can quickly look up on-screen items (like plants she’s reading about) from an image, and the on-screen translation is incredible.

Also, Circle to Search gets around link and text copy blocking, giving you back the same freedoms you had on a PC.

Personally I’d never go back to a phone without Circle to Search. It’s so underrated and a giant shift in smartphone capabilities.

It’s very likely that we’ll have full live screen reading assistants in the near future which can perform Circle to Search-like functions and even visual modifications live. It’s easy to dismiss this as a gimmick, but there’s a lot of incredible potential here, especially for casual and older users.

Google Lens already did that though, all you need is decent OCR and an image classification model (which is a precursor to the current “AI” hype, but actually useful).

That is still AI though…

An image classification model isn’t really “AI” the way it’s marketed right now. If Google used an image classification model to give you holiday recommendations or answer general questions, everyone would immediately recognize they’re using it wrong. But use a token prediction model for purposes totally unrelated to predicting the next token and people are like “ChatGPT is my friend who tells me what to put on pizza and there’s nothing strange about that”.

It is AI in every sense of the word tho. Maybe you’re confusing it with LLM?

Ye, students are currently one of the few major beneficiaries of LLMs lol.

Not sure students are necessarily benefiting? The point of education isn’t to hand in completed assignments. Although my wife swears that the Duolingo AI is genuinely helping her with learning French, so I guess maybe, depending on how it’s being used.

I think the article is missing the point on two levels.

First is the significance of this data, or rather lack of significance. The internet existed for 20-some years before the majority of people felt they had a use for it. AI is similarly in a finding-its-feet phase where we know it will change the world but haven’t quite figured out the details. After a period of increased integration into our lives it will reach a tipping point where it gains wider usage, and we’re already very close to that.

Also they are missing what I would consider the two main reasons people don’t use it yet.

First, many people just don’t know what to do with it (as was the case with the early internet). The knowledge/imagination/interface/tools aren’t mature enough so it just seems like a lot of effort for minimal benefits. And if the people around you aren’t using it, you probably don’t feel the need.

Second reason is that the thought of it makes people uncomfortable or downright scared. Quite possibly with good reason. But even if it all works out well in the end, what we’re looking at is something that will drive the pace of change beyond what human nature can easily deal with. That’s already a problem in the modern world, but we ain’t seen nothing yet. The future looks impossible to anticipate, and that’s scary. Not engaging with AI is arguably just hiding your head in the sand, but maybe that beats contemplating an existential terror that you’re powerless to stop.

I want a voice assistant that can set timers for me, search the internet, and maybe play music from an app I select. I only ever use it when I am cooking something and don’t have my hands free to do those things.

It’s possible that people don’t realize what is AI and what is AI marketing speak out there nowadays.

For a fully automated Her-like experience, or Ironman-style Jarvis? That would be rad. But we are not really close to that at all. It sort of exists with LLM chat, but the implementation on phones is not even close to being there.

Maybe if it was able to do anything useful (like tell me where on my phone specific settings are when I can’t remember their names but know what they do), people would consider it slightly helpful. But instead of making targeted models that know device-specific information, the companies insist on making generic models that do almost nothing well.

If the model was properly integrated into the assistant AND the assistant properly integrated into the phone AND the assistant had competent scripting abilities (looking at you Google, filth that broke scripts relying on recursion) then it would probably be helpful for smart home management by being able to correctly answer “are there lights on in rooms I’m not in?” and respond with something like “yes, there are 3 lights on. Do you want me to turn them off?”. But it seems that the companies want their products to fail. Heck, if only the assistant could even do a simple on-device task like “take a one minute video and send it to friend A” or “strobe the flashlight at 70 BPM” or “does epubfile_on_device mention the cheeto in office”, or even just know how it is being run (Gemini, when run from the Google Assistant, doesn’t).

edit: I suppose it might be useful to waste someone else’s time.