I think AI is neat.

Knowing that LLMs are just “parroting” is one of the first steps to implementing them in safe, effective ways where they can actually provide value.

They’re kind of right. LLMs are not general intelligence and there’s not much evidence to suggest that LLMs will lead to general intelligence. A lot of the hype around AI is manufactured by VCs and companies that stand to make a lot of money off of the AI branding/hype.

Depends on what you mean by general intelligence. I’ve seen a lot of people confuse Artificial General Intelligence and AI more broadly. Even something as simple as the K-nearest neighbor algorithm is artificial intelligence, as this is a much broader topic than AGI.
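To show how modest something can be and still count as "AI", here is a minimal k-nearest-neighbours classifier in plain Python. It is only a sketch: the function name, the toy points, and the default `k=3` are illustrative assumptions, and it uses Euclidean distance with a simple majority vote.

```python
from collections import Counter
import math


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    `train` is a list of ((x, y), label) pairs; distance is Euclidean.
    """
    # Sort the training points by distance to the query and keep the k closest.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    # Majority vote over the labels of those neighbours.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two clusters labelled "a" and "b".
train = [((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"),
         ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b")]

print(knn_predict(train, (0.5, 0.5)))  # a
print(knn_predict(train, (5.5, 5.2)))  # b
```

There is no training step and no "understanding" involved, just distances and a vote, yet it sits squarely inside the field of AI.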

Wikipedia gives two definitions of AGI:

> An artificial general intelligence (AGI) is a hypothetical type of intelligent agent which, if realized, could learn to accomplish any intellectual task that human beings or animals can perform. Alternatively, AGI has been defined as an autonomous system that surpasses human capabilities in the majority of economically valuable tasks.

If some task can be represented through text, an LLM can, in theory, be trained to perform it either through fine-tuning or few-shot learning. The question then is how general do LLMs have to be for one to consider them to be AGIs, and there’s no hard metric for that question.
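For anyone unfamiliar with the term, few-shot learning in the in-context sense means the model's weights are not changed at all; a handful of worked examples is simply placed in the prompt ahead of the new input. A rough sketch of assembling such a prompt, assuming a hypothetical `Input:`/`Output:` layout (any consistent format would do, and actually sending it to a model is left out):

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and a new query into one prompt.

    The "Input:/Output:" layout is an arbitrary convention chosen for illustration.
    """
    parts = [instruction]
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    # The model is expected to continue the text after the final "Output:".
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("bread", "pain")],
    "apple",
)
print(prompt)
```

Passing an empty example list gives the zero-shot version of the same prompt.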

I can’t pass the bar exam like GPT-4 did, and it also has a lot more general knowledge than me. Sure, it gets stuff wrong, but so do humans. We can interact with physical objects in ways that GPT-4 can’t, but it is catching up. Plus Stephen Hawking couldn’t move the same way that most people can either and we certainly wouldn’t say that he didn’t have general intelligence.

I’m rambling but I think you get the point. There’s no clear threshold or way to calculate how “general” an AI has to be before we consider it an AGI, which is why some people argue that the best LLMs are already examples of general intelligence.

> Depends on what you mean by general intelligence. I’ve seen a lot of people confuse Artificial General Intelligence and AI more broadly. Even something as simple as the K-nearest neighbor algorithm is artificial intelligence, as this is a much broader topic than AGI.

Well, I mean the ability to solve problems we don’t already have the solution to. Can it cure cancer? Can it solve the P vs NP problem?

And by the way, Wikipedia tags that second definition as dubious, as that is the definition put forth by OpenAI, which, again, has a financial incentive to make us believe LLMs will lead to AGI.

Not only has it not been proven whether LLMs will lead to AGI, it hasn’t even been proven that AGIs are possible.

> If some task can be represented through text, an LLM can, in theory, be trained to perform it either through fine-tuning or few-shot learning.

No it can’t. If the task requires the LLM to solve a problem that hasn’t been solved before, it will fail.

> I can’t pass the bar exam like GPT-4 did

Exams often are bad measures of intelligence. They typically measure your ability to consume, retain, and recall facts. LLMs are very good at that.

Ask an LLM to solve a problem without a known solution and it will fail.

> We can interact with physical objects in ways that GPT-4 can’t, but it is catching up. Plus Stephen Hawking couldn’t move the same way that most people can either and we certainly wouldn’t say that he didn’t have general intelligence.

The ability to interact with physical objects is very clearly not a good test for general intelligence and I never claimed otherwise.

I know the second definition was proposed by OpenAI, who obviously has a vested interest in this topic, but that doesn’t mean it can’t be a useful or informative conceptualization of AGI. After all, we have to set some threshold for how much intelligence an AI needs to display, and in what areas, for it to be considered an AGI. Their proposal of an autonomous system that surpasses humans in economically valuable tasks is fairly reasonable, though it’s still pretty vague and very much debatable, which is why this isn’t the only definition that’s been proposed.

Your definition is definitely more peculiar as I’ve never seen anyone else propose something like it, and it also seems to exclude humans since you’re referring to problems we can’t solve.

The next question then is what problems specifically AI would need to solve to fit your definition, and with what accuracy. Do you mean solve any problem we can throw at it? At that point we’d be going past AGI and now we’re talking about artificial superintelligence…

> Not only has it not been proven whether LLMs will lead to AGI, it hasn’t even been proven that AGIs are possible.

By your definition AGI doesn’t really seem possible at all. But of course, your definition isn’t how most data scientists or people in general conceptualize AGI, which is the point of my comment. It’s very difficult to put a clear-cut line on what AGI is or isn’t, which is why there are those like you who believe it will never be possible, but there are also those who argue it’s already here.

> No it can’t. If the task requires the LLM to solve a problem that hasn’t been solved before, it will fail.

> Ask an LLM to solve a problem without a known solution and it will fail.

That’s simply not true. That’s the whole point of the concept of generalization in AI and what the few-shot and zero-shot metrics represent: LLMs solving problems represented in text with few or no prior examples by reasoning beyond what they saw in the training data. You can actually test this yourself by simply signing up to use ChatGPT, since it’s free.

> Exams often are bad measures of intelligence. They typically measure your ability to consume, retain, and recall facts. LLMs are very good at that.

So are humans. We’re also deterministic machines that output some action depending on the inputs we get through our senses, much like an LLM outputs some text depending on the inputs it received, plus as I mentioned they can reason beyond what they’ve seen in the training data.

> The ability to interact with physical objects is very clearly not a good test for general intelligence and I never claimed otherwise.

I wasn’t accusing you of anything, I was just pointing out that there are many things we can argue require some degree of intelligence, even physical tasks. The example in the video requires understanding the instructions, the environment, and how to move the robotic arm in order to complete new instructions.

I find LLMs and AGI interesting subjects and was hoping to have a conversation on the nuances of these topics, but it’s pretty clear that you just want to turn this into some sort of debate to “debunk” AGI, so I’ll be taking my leave.

Yes! Refreshing to see someone a little literate here. Thanks for fighting the misinformation, man.

Yeah, this sounds about right. What was OP implying? I’m a bit lost.

I believe they were implying that a lot of the people who say “it’s not real AI it’s just an LLM” are simply parroting what they’ve heard.

Which is a fair point, because AI has never meant “general AI”; it’s an umbrella term for a wide variety of intelligence-like tasks performed by computers.

Autocorrect on your phone is a type of AI, because it compares the words you type against a database of known words, measures how close what you typed is to those words via a “typo distance”, and adds new words to its database when you overrule it so it doesn’t make the same mistake. It’s like saying a motorcycle isn’t a real vehicle because a real vehicle has two wings, a roof, and flies through the air filled with hundreds of people.
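The autocorrect behaviour described above can be sketched in a few lines, assuming that “typo distance” means Levenshtein edit distance (the minimum number of single-character insertions, deletions, and substitutions). Real phone keyboards very likely use more elaborate, probability-weighted models; the `Autocorrect` class, its method names, and the `max_distance=2` cutoff here are all hypothetical.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]


class Autocorrect:
    """Hypothetical toy autocorrect: nearest known word within a distance cutoff."""

    def __init__(self, words):
        self.known = set(words)

    def suggest(self, typed: str, max_distance: int = 2) -> str:
        # Words already in the database are left alone.
        if typed in self.known:
            return typed
        # Otherwise pick the closest known word (ties broken alphabetically).
        best = min(self.known, key=lambda w: (edit_distance(typed, w), w))
        return best if edit_distance(typed, best) <= max_distance else typed

    def overrule(self, word: str) -> None:
        # The user rejected a correction: remember the word so it is kept next time.
        self.known.add(word)


ac = Autocorrect(["hello", "world", "help"])
print(ac.suggest("wold"))  # world
ac.overrule("wold")
print(ac.suggest("wold"))  # wold
```

The "learning" here is nothing more than adding a word to a set, which is exactly why it is a useful reminder of how broad the AI umbrella is.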

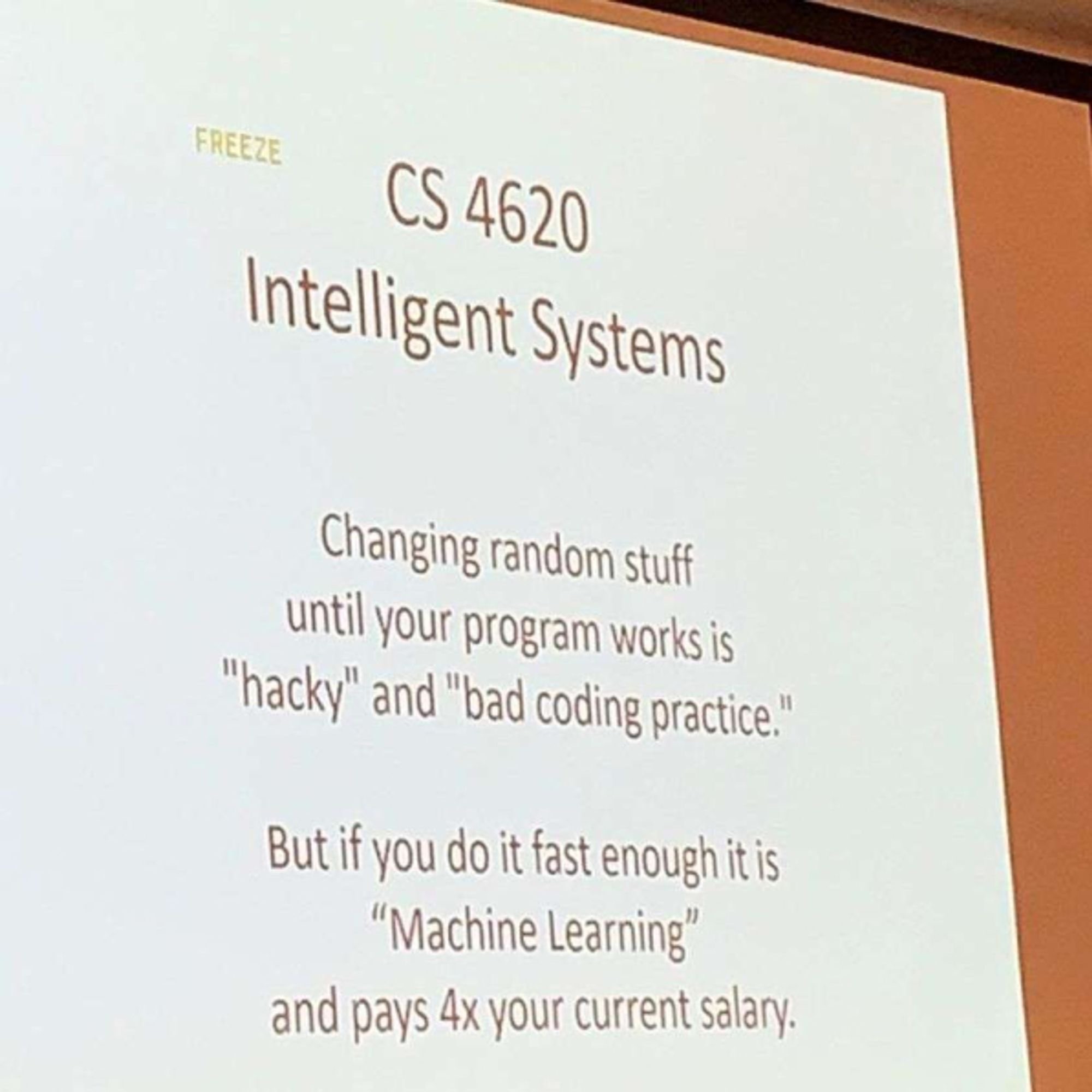

Reminds me of this meme I saw somewhere around here the other week

I think LLMs are neat, and Teslas are neat, and HHO generators are neat, and aliens are neat…

…but none of them live up to all of the claims made about them.

Been destroyed for this opinion here. Not many practitioners here, just laymen and mostly techbros in this field… But maybe I haven’t found the right node?

I’m into local diffusion models and open source LLMs only, not into the megacorp stuff.

All the stuff on the dbzero instance is pro open source and pro piracy so fairly anti corpo and not tech illiterate

Thanks, I’ll join in

If anything, people really need to start experimenting beyond talking to it like it’s human, or in a few years we will end up with a huge AI-illiterate population.

I’ve had someone stubbornly fight me, calling local LLMs “an overhyped downloadable chatbot app” and saying the people on fossai are just a bunch of AI-worshipping fools.

I was like, tell me you know absolutely nothing about what you’re talking about by pretending to know everything.

But the thing is, it’s really fun and exciting to work with. The open source community is extremely nice and helpful, one of the most non-toxic fields I have dabbled in! It’s very fun to test parameters and tools and write code chains to try different stuff, and it’s come a long way. It’s rewarding too, because you get really fun responses.

Aren’t the open source LLMs still censored though? I saw someone make an off-hand comment that one of the big ones (OLLAMA or something?) was censored past version 1, so you couldn’t ask it how to make meth.

I don’t wanna make meth but if OSS LLMs are being censored already it makes having a local one pretty fucking pointless, no? You may as well just use ChatGPT. Pray tell me your thoughts?

Could be legal issues: if an LLM tells you how to make meth but gets a step or two wrong and that results in your death, the family might have a case to sue.

But I also don’t know what all you mean when you say censorship.

> But I also don’t know what all you mean when you say censorship.

It was literally just that. The commenter I saw said something like “it’s censored after ver 1, so don’t expect it to tell you how to cook meth.”

But when I hear the word “censored” I think of all the stuff ChatGPT refuses to talk about. It won’t write jokes about protected groups and VAST swathes of stuff around it. Like asking it to define “fag-got” can make it cough and refuse, even though it’s a British foodstuff.

Blocking anything sexual, so no romantic/erotica novel writing.

The latest complaint about ChatGPT is its laziness, which I can’t help feeling is due to overzealous censorship. Censorship doesn’t just block the specific things but also entirely innocent things (see fag-got above).

Want help writing a book about Hitler being seduced by a Jewish woman, with BDSM scenes? No chance. No talking about Hitler, sex, Jewish people or BDSM. That’s censorship.

I’m using these as examples; I’ve no real interest in them, but I am affected by annoyances and having to reword requests because they’ve been misinterpreted as touching on censored subjects.

Just take a look at r/ChatGPT and you’ll see endless posts by people complaining they triggered its censorship with asinine prompts.

Oh ok, then yea that’s a problem, any censorship that’s not directly related to liability issues should be nipped in the bud.

No, there are many uncensored ones as well.

Depends who made the model and how. Llama is a Meta product and it’s genuinely really powerful (I wonder where Zuckerberg gets all the data for it).

Because it’s powerful, you see many people use it as a starting point to develop their own AI ideas and systems. But it’s not the only decent open source model, and innovations that work for one model often work for all the others, so it doesn’t matter in the end.

Every single model used now will be completely outdated and forgotten in a year or two. Even GPT-4 and Gemini.

Holy crap, didn’t expect him to admit it this soon:

Zuck Brags About How Much of Your Facebook, Instagram Posts Will Power His AI

Have you ever considered you might be, you know, wrong?

No sorry you’re definitely 100% correct. You hold a well-reasoned, evidenced scientific opinion, you just haven’t found the right node yet.

Perhaps a mental gymnastics node would suit sir better? One without all us laymen and tech bros clogging up the place.

Or you could create your own instance populated by AIs where you can debate them about the origins of consciousness until androids dream of electric sheep?

Do you even understand my viewpoint?

Why only personal attacks and nothing else?

You obviously have hate issues, which is exactly why I have a problem with techbros explaining why LLMs suck.

They haven’t researched them or understood how they work.

It’s a fucking incredibly fast developing new science.

Nobody understands how it works.

It’s so silly to pretend to know how bad it works when people working with them daily discover new ways the technology surprises us. Idiotic to be pessimistic about such a field.

> You obviously have hate issues

Says the person who starts chucking out insults the second they get downvoted.

From what I gather, anyone that disagrees with you is a tech bro with issues, which is quite pathetic to the point that it barely warrants a response but here goes…

I think I understand your viewpoint. You like playing around with AI models and have bought into the hype so much that you’ve completely failed to consider their limitations.

People do understand how they work; it’s clever mathematics. The tech is amazing and will no doubt bring numerous positive applications for humanity, but there’s no need to go around making outlandish claims like they understand or reason in the same way living beings do.

You consider intelligence to be nothing more than parroting which is, quite frankly, dangerous thinking and says a lot about your reductionist worldview.

You may redefine the word “understanding” and attribute it to an algorithm if you wish, but myself and others are allowed to disagree. No rigorous evidence currently exists that we can replicate any aspect of consciousness using a neural network alone.

You say pessimistic, I say realistic.

Haha, it’s pure nonsense. Just do a little digging instead of doing the exact guesstimation I am talking about. You obviously don’t understand the field.

Once again not offering any sort of valid retort, just claiming anyone that disagrees with you doesn’t understand the field.

I suggest you take a cursory look at how to argue in good faith, learn some maths and maybe look into how neural networks are developed. Then study some neuroscience and how much we comprehend the brain and maybe then we can resume the discussion.

You attack my viewpoint, but misunderstood it. I corrected you. Now you tell me I am wrong with my viewpoint (I am not btw) and start going down the idiotic path of bad faith conversation, while strawman arguing your own bad faith accusation, only because you are butthurt that you didn’t understand. Childish approach.

You don’t understand, because no expert currently understands these things completely. It’s pure nonsense defecation coming out of your mouth

You don’t really have one lol. You’ve read too many pop-sci articles from AI proponents and haven’t understood any of the underlying tech.

All your retorts boil down to copying my arguments because you seem to be incapable of original thought. Therefore it’s not surprising you believe neural networks are approaching sentience and consider imitation to be the same as intelligence.

You seem to think there’s something mystical about neural networks but there is not, just layers of complexity that are difficult for humans to unpick.

You argue like a religious zealot or Trump supporter because at this point it seems you don’t understand basic logic or how the scientific method works.

They’re predicting the next word without any concept of right or wrong, there is no intelligence there. And it shows the second they start hallucinating.
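To make "predicting the next word" concrete, here is a toy bigram model with greedy decoding: it counts which word follows which in a tiny corpus, then repeatedly appends the most frequent follower. This is only an illustration of the loop's shape; real LLMs use transformer networks over subword tokens and condition on far more context than the previous word. The corpus and function names are made up for the example.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str):
    """Count which word follows which in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start: str, length: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # no statistics for this word, so stop
        # Counter.most_common breaks ties by first occurrence.
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(generate(model, "the", length=4))  # the cat sat on the
```

Nothing in this loop checks whether the output is true; it only reproduces what was statistically likely in the training text, which is the point being made above, albeit in a vastly simplified form.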

They are a bit like you’d take just the creative writing center of a human brain. So they are like one part of a human mind without sentience or understanding or long term memory. Just the creative part, even though they are mediocre at being creative atm. But it’s shocking because we kind of expected that to be the last part of human minds to be able to be replicated.

Put enough of these “parts” of a human mind together and you might get a proper sentient mind sooner than later.

…or you might not.

It’s fun to think about but we don’t understand the brain enough to extrapolate AIs in their current form to sentience. Even your mention of “parts” of the mind are not clearly defined.

There are so many potential hidden variables. Sometimes I think people need reminding that the brain is the most complex thing in the universe; we don’t fully understand it yet, and neural networks are just loosely based on the structure of neurons, not an exact replica.

True, it’s speculation. But before GPT-3 I never imagined AI achieving creativity. I had no idea how you would do it; I would have said it’s a hard problem, or like magic, and poof, now it’s a reality. A huge leap in quality driven just by quantity of data and computing, which was shocking: it’s “so simple”, at least in this case.

So that should tell us something. We don’t understand the brain, but maybe there isn’t much to understand. How the biocomputing hardware works is relatively clear, and it’s all made out of the same stuff. So it stands to reason that the other parts or functions of a brain might also be replicated in similar ways.

Or maybe not. Or we might need a completely different way to organize and train other functions of a mind. Or it might take a much larger increase in speed and memory.

You say maybe there’s not much to understand about the brain, but I entirely disagree: it’s the most complex object in the known universe and we haven’t discovered all of its secrets yet.

Generating pictures from a vast database of training material is nowhere near comparable.

Ok, again I’m just speculating, so I’m not trying to argue. But it’s possible that there are no “mysteries of the brain”, just irreducible complexity. What if it all comes down to the functionality of the synapses and the organization, number, and weights of the connections in the brain? Then the brain is like a computer you put a program in. The magic happens in how it’s organized.

And yeah we don’t know how that exactly works for the human brain, but maybe it’s fundamentally unknowable. Maybe there is never going to be a language to describe human consciousness because it’s entirely born out of the complexity of a shit ton of simple things and there is no “rhyme or reason” if you try to understand it. Maybe the closest we get are the models psychology creates.

Then there is fundamentally no difference between painting based on a “vast database of training material” in a human mind and in a computer AI. Currently AI-generated imagery is a bit limited in creativity and it’s mediocre, but it’s there.

Then it would logically follow that all the other functions of a human brain are similarly “possible” if we train it right and add enough computing power and memory. Without ever knowing the secrets of the human brain. I’d expect the truth somewhere in the middle of those two perspectives.

Another argument in favor of this would be that the human brain evolved through random change that was filtered (at least if you do not believe in intelligent design). That means there is no clever organizational structure or something underlying the brain. Just change, test, filter, reproduce. The worst, most complex spaghetti code in the universe. Code written by a moron that can’t be understood. But that means it should also be reproducible by similar means.

Possible, yes. It’s also entirely possible there’s interactions we are yet to discover.

I wouldn’t claim it’s unknowable. Just that there’s little evidence so far to suggest any form of sentience could arise from current machine learning models.

That hypothesis is not verifiable at present as we don’t know the ins and outs of how consciousness arises.

> Then it would logically follow that all the other functions of a human brain are similarly “possible” if we train it right and add enough computing power and memory. Without ever knowing the secrets of the human brain. I’d expect the truth somewhere in the middle of those two perspectives.

Lots of things are possible; we use the scientific method to test them, not speculative logical arguments.

> Functions of the brain

These would need to be defined.

> But that means it should also be reproducible by similar means.

Can’t be sure of this… For example, what if quantum interactions are involved in brain activity? How does the grey matter in the brain affect the functioning of neurons? How do the heart/gut affect things? Do cells which aren’t neurons provide any input? Does some aspect of consciousness arise from the very material the brain is made of?

As far as I know all the above are open questions and I’m sure there are many more. But the point is we can’t suggest there is actually rudimentary consciousness in neural networks until we have pinned it down in living things first.

I feel like our current “AIs” are like the Virtual Intelligences in Mass Effect. They can perform some tasks and hold a conversation, but they aren’t actually “aware”. We’re still far off from a true AI like the Geth or EDI.

The reason it’s dangerous is because there are a significant number of jobs and people out there that do exactly that. Which can be replaced…

This post isn’t true, LLMs do have an understanding of things.

SELF-RAG: Improving the Factual Accuracy of Large Language Models through Self-Reflection

Chess-GPT’s Internal World Model

POKÉLLMON: A Human-Parity Agent for Pokémon Battle with Large Language Models

Whilst everything you linked is great research which demonstrates the vast capabilities of LLMs, none of it demonstrates understanding as most humans know it.

This argument always boils down to one’s definition of the word “understanding”. For me that word implies a degree of consciousness, for others, apparently not.

To quote GPT-4:

> LLMs do not truly understand the meaning, context, or implications of the language they generate or process. They are more like sophisticated parrots that mimic human language, rather than intelligent agents that comprehend and communicate with humans. LLMs are impressive and useful tools, but they are not substitutes for human understanding.

You are moving goalposts.

“Understanding” can only be granted once you reach, like, old age as a human and have meditated in a cave.

That’s my definition of it.

No one is moving goalposts, there is just a deeper meaning behind the word “understanding” than perhaps you recognise.

The concept of understanding is poorly defined which is where the confusion arises, but it is definitely not a direct synonym for pattern matching.

Keep seething, OpenAI’s LLMs will never achieve AGI that will replace people

That was never the goal… You might as well say that a bowling ball will never be effectively used to play golf.

Ok, but so do most humans? So few people actually have true understanding in topics. They parrot the parroting that they have been told throughout their lives. This only gets worse as you move into more technical topics. Ask someone why it is cold in winter and you will be lucky if they say it is because the days are shorter than in summer. That is the most rudimentary “correct” way to answer that question and it is still an incorrect parroting of something they have been told.

Ask yourself, what do you actually understand? How many topics could you be asked “why?” on repeatedly and actually be able to answer more than 4 or 5 times. I know I have a few. I also know what I am not able to do that with.

Few people truly understand what understanding means at all. I had a teacher in college who seriously thought you should not understand the content of lessons but simply memorize it to the letter.

This is only one type of intelligence, and LLMs are already better than humans at regurgitating facts. But I think people really underestimate how smart the average human is. We are incredible problem solvers, and AI can’t even match us at something as simple as driving a car.

Lol @ driving a car being simple. That is one of the more complex sensory somatic tasks that humans do. You have to calculate the rate of all vehicles in front of you, assess for collision probabilities, monitor for non-vehicle obstructions (like people, animals, etc.), adjust the accelerator to maintain your own velocity while terrain changes, be alert to any functional changes in your vehicle and be ready to adapt to them, maintain a running inventory of laws which apply to you at the given time and be sure to follow them. Hell, that is not even an exhaustive list for a sunny day under the best conditions. Driving is fucking complicated. We have all just formed strong and deeply connected pathways in our somatosensory and motor cortexes to automate most of the tasks. You might say it is a very well-trained neural network with hundreds to thousands of hours spent refining and perfecting the responses.

The issue that AI has right now is that we are only running 1 to 3 sub-AIs to optimize and calculate results. Once that number goes up, they will be capable of a lot more. For instance: one AI for finding similarities, one for categorizing them, one for mapping them into a use case hierarchy to determine when certain use cases apply, one to analyze structure, one to apply human kineodynamics to the structure and a final one to analyze for effectiveness of the kineodynamic use cases when done by a human. This would be a structure that could be presented an object and told that humans use it and the AI brain could be able to piece together possible uses for the tool and describe them back to the presenter with instructions on how to do so.

I don’t think actual parroting is the problem. The problem is they don’t understand a word outside of how it is organized. They can’t be told to do simple logic because they don’t have a simple understanding of each word in their vocabulary. They can only reorganize things to varying degrees.

Some systems clearly do that, though. Or are you just talking about LLMs?

Just LLMs.

It’s like saying bro, this mouse can’t even type text if I don’t use an on screen keyboard

So, super-informed OP, tell me how they work. Technically, not CEO press-release speak. Explain the theory.

I’m not OP, and frankly I don’t really disagree with the characterization of ChatGPT as “fancy autocomplete”. But…

I’m still in the process of reading this cover-to-cover, but Chapter 12.2 of Deep Learning: Foundations and Concepts by Bishop and Bishop explains how natural language transformers work, and then has a short section about LLMs. All of this is in the context of a detailed explanation of the fundamentals of deep learning. The book cites the original papers from which it is derived, most of which are on arXiv. There’s a nice copy on Library Genesis. It requires some multi-variable probability and statistics, and an assload of linear algebra, reviews of which are included.

So obviously when the CEO explains their product they’re going to say anything to make the public accept it. Therefore, their word should not be trusted. However, I think that when AI researchers talk simply about their work, they’re trying to shield people from the mathematical details. Fact of the matter is that behind even a basic AI is a shitload of complicated math.

At least from personal experience, people tend to get really aggressive when I try to explain math concepts to them. So they’re probably assuming based on their experience that you would be better served by some clumsy heuristic explanation.

IMO it is super important for tech-inclined people interested in making the world a better place to learn the fundamentals and limitations of machine learning (what we typically call “AI”) and bring their benefits to the common people. Clearly, these technologies are a boon for the wealthy and powerful, and like always, have been used to fuck over everyone else.

IMO, as it is, AI as a technology has inherent patterns that induce centralization of power, particularly the requirement for massive datasets (especially for LLMs) and the requirement to understand mathematical fundamentals that only the wealthy can afford to stay in school long enough to learn. However, I still think that we can leverage AI technologies for the common good, particularly by developing open-source alternatives, encouraging the use of open and ethically sourced datasets, and distributing the computing load so that people who can’t afford a fancy TPU can still use AI somehow.

I wrote all this because I think that people dismiss AI because it is “needlessly” complex and therefore bullshit. In my view, it is necessarily complex because of the transformative potential it has. If and only if you can spare the time, then I encourage you to learn about machine learning, particularly deep learning and LLMs.

That’s my point. OP doesn’t know the maths, has probably never implemented any sort of ML, and is smugly confident that people pointing out the flaws in a system generating one token at a time are just parroting some line.

These tools are excellent at manipulating text (factoring in the biases they have: I wouldn’t recommend using one for internal communications in a multinational corporation, for example, as they’ll clobber non-Euro-derived cultures) where the user controls both input and output.

Help me summarise my report, draft an abstract for my paper, remove jargon from my email, rewrite my email in the form of a numbered question list, analyse my tone here, write 5 similar versions of this action scene I drafted to help me refine it. All excellent.

Teach me something I don’t know (e.g. summarise an article, answer a question, etc.)? Disaster!

They can summarize articles fairly well

No, they can summarise articles very convincingly! Big difference.

They have no model of what’s important, or truth. Most of the time they probably do ok but unless you go read the article you’ll never know if they left out something critical, hallucinated details, or inverted the truth or falsity of something.

That’s the problem: they’re not an intern, they don’t have a human mind. They recognise patterns in articles and patterns in summaries, and they non-deterministically adjust the patterns in the article towards the patterns in summaries of articles. Do you see the problem? They produce stuff that looks very much like an article summary but do not summarise; there is no intent, no guarantee of truth, in fact no concern for truth at all except what incidentally falls out of the statistical probability wells.
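The "non-deterministic" part usually comes from sampling: the model assigns a score to every candidate next token, the scores are turned into probabilities, and one is drawn at random. A minimal sketch of temperature-scaled softmax sampling, where the candidate words and their scores are invented for illustration and `sample_next` is a hypothetical name, not any library's API:

```python
import math
import random


def sample_next(scores: dict, temperature: float = 1.0, rng=random) -> str:
    """Sample one word from raw scores using a temperature-scaled softmax."""
    if temperature <= 0:
        # Temperature 0 is commonly treated as greedy: always take the top score.
        return max(scores, key=scores.get)
    words = list(scores)
    # Subtract the max score before exponentiating, for numerical stability.
    top = max(scores.values())
    weights = [math.exp((scores[w] - top) / temperature) for w in words]
    # random.choices normalises the weights itself.
    return rng.choices(words, weights=weights, k=1)[0]


# Hypothetical scores a model might assign to candidate next words.
scores = {"summary": 2.0, "article": 1.0, "banana": -1.0}
rng = random.Random(0)
print([sample_next(scores, temperature=1.0, rng=rng) for _ in range(5)])
print(sample_next(scores, temperature=0))  # summary
```

Lower temperatures concentrate probability on the top-scoring word and higher ones flatten the distribution; at no setting does the procedure consult whether the chosen word is true.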

That’s a good way of explaining it. I suppose you’re using a stricter definition of summary than I was.

I think it’s really important to keep in mind the separation between doing a task and producing something which looks like the output of a task when talking about these things. The reason being that their output is tremendously convincing regardless of its accuracy, and given that writing text is something we only see human minds do it’s so easy to ascribe intent behind the emission of the model that we have no reason to believe is there.

Amazingly, it turns out that often merely producing something which looks like the output of a task apparently accidentally accomplishes the task on the way. I have no idea why merely predicting the next plausible word can mean that the model emits something similar to what I would write down if I tried to summarise an article! That’s fascinating! But because it isn’t actually setting out to do that, there’s no guarantee it did, and if I don’t check, the output will be indistinguishable to me, because that’s what the models are built to do above all else.

So I think that’s why we need to keep them in closed loops of person -> model -> person, and explaining why, and intuiting whether a particular application is potentially dangerous or not, is hard if we don’t maintain a clear separation between the different processes driving human vs LLM text output.

You are extremely outdated in your understanding for someone who attacks others for not implementing their own LLM.

They are so far beyond the point you are discussing at the moment. Look at the AutoGen and MemGPT approaches, and at the way agent networks can solve problems and develop far beyond the point we were at years ago.

It really does not matter whether you implement your own LLM.

Then stay out of the loop for half a year

It turned out that it’s quite useless to debate the parrot catchphrase, because all intelligence is parroting

It’s just not useful to pretend they only “guess” what a summary of an article is

They don’t. It’s not how they work and you should know that if you made one

I always argue that human learning does exactly the same. You just parrot and after some time you believe it’s your knowledge. Inventing new things is applying seen before mechanisms on different dataset.